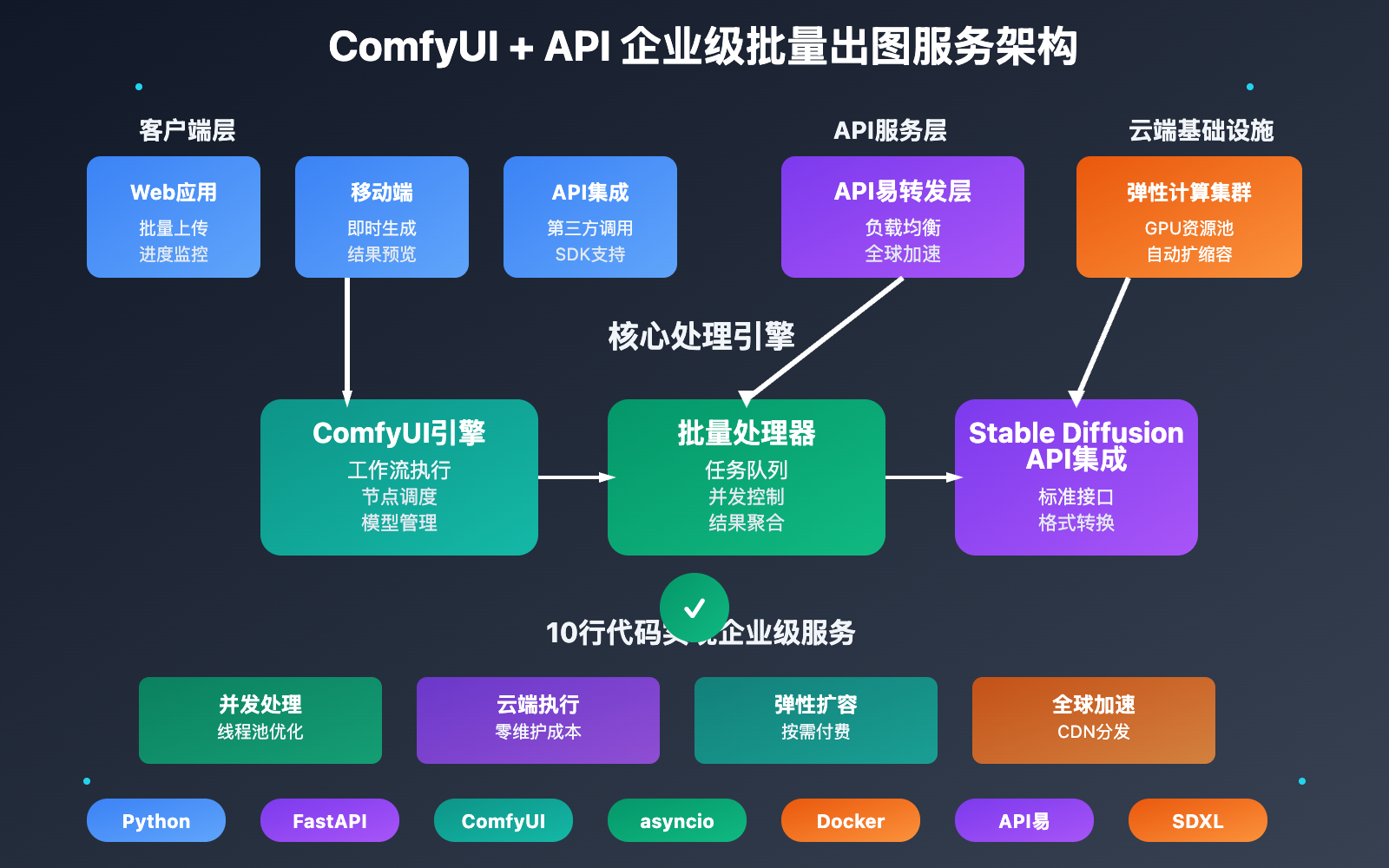

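Many developers want to combine ComfyUI's workflow capabilities with a Stable Diffusion API to build a scalable batch image generation service, but get stuck on deployment and configuration. With a sensible architecture and the right API, the core of such a service can be implemented in very little code.

This article shows how to build a batch image generation service with ComfyUI and a Stable Diffusion API, from the core architecture through a roughly 10-line core function to a service skeleton intended for production use.

Along the way, you will see how to expose ComfyUI workflows through an API, handle concurrent requests, manage a task queue, and post-process results.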

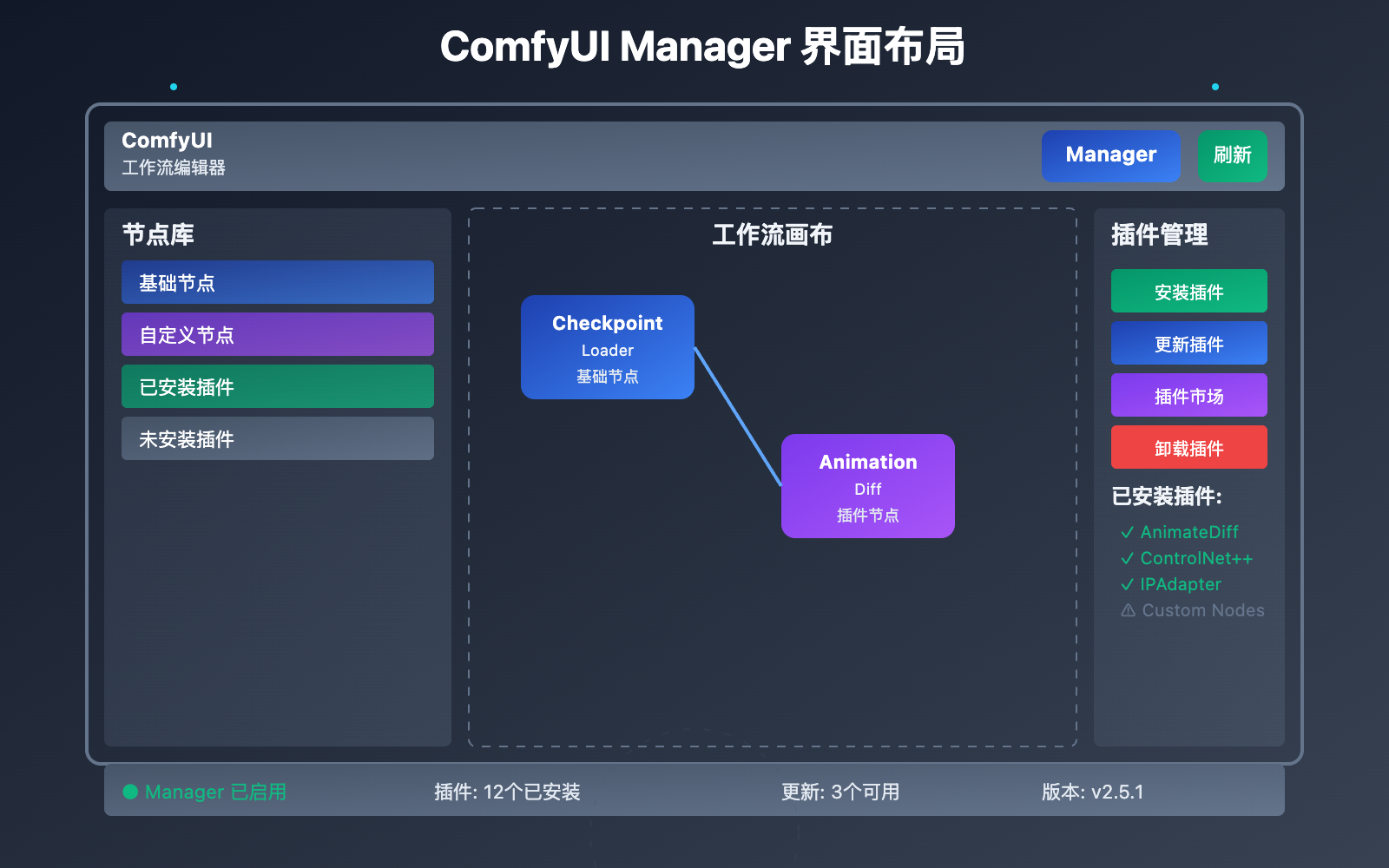

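ComfyUI + Stable Diffusion API Architecture Design

The core advantage of pairing ComfyUI with a Stable Diffusion API is that it combines the flexibility of local workflows with the scalability of a cloud API.

| Component | Local ComfyUI | Cloud API | Best practice |
|---|---|---|---|
| Workflow design | Visual node editor | Standardized API calls | Design locally, execute in the cloud |
| Model management | Limited by local storage | Large hosted model library | Call on demand to reduce cost |
| Concurrency | Bounded by local hardware | Elastic scaling | Load balancing in the cloud |
| Maintenance cost | Requires operations work | Handled by the provider | Focus on business logic |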

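🚀 Core Implementation in 10 Lines

The core of the service is shown below: `batch_generate_images` fans the prompts out to a thread pool, and `generate_single_image` makes one API call per prompt.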

    import requests
    from concurrent.futures import ThreadPoolExecutor

    # 🎯 Core configuration: this is the only part you need to change
    API_BASE = "https://vip.apiyi.com/v1"
    API_KEY = "your_api_key_here"


    def batch_generate_images(prompts, workflow_template=None):
        """Generate one image per prompt, running up to 5 requests in parallel."""
        results = []
        # Submit every prompt to the thread pool
        with ThreadPoolExecutor(max_workers=5) as executor:
            futures = [executor.submit(generate_single_image, prompt, workflow_template)
                       for prompt in prompts]
            # Collect results in submission order; a failure becomes an error entry
            for future in futures:
                try:
                    result = future.result(timeout=60)
                    results.append(result)
                except Exception as e:
                    results.append({"error": str(e)})
        return results  # the 10-line core logic ends here


    def generate_single_image(prompt, workflow_template=None):
        """Generate a single image."""
        payload = {
            "model": "stable-diffusion-xl-base-1.0",
            "prompt": prompt,
            "width": 1024,
            "height": 1024,
            "steps": 20,
            "guidance_scale": 7.5
        }
        # If a ComfyUI workflow template is given, use the workflow endpoint
        if workflow_template:
            payload["workflow"] = workflow_template
            endpoint = f"{API_BASE}/comfyui/generate"
        else:
            endpoint = f"{API_BASE}/images/generations"
        response = requests.post(
            endpoint,
            headers={"Authorization": f"Bearer {API_KEY}"},
            json=payload,
            timeout=60,  # without a timeout, a stalled request blocks its worker indefinitely
        )
        response.raise_for_status()  # turn HTTP error responses into exceptions
        return response.json()


    # 🎯 Usage example
    if __name__ == "__main__":
        test_prompts = [
            "a beautiful sunset over mountains",
            "a cute cat playing with yarn",
            "futuristic city with flying cars"
        ]
        results = batch_generate_images(test_prompts)
        # Count only entries without an error; len(results) also includes failures
        succeeded = sum(1 for r in results if "error" not in r)
        print(f"Generated {succeeded} of {len(results)} images successfully")

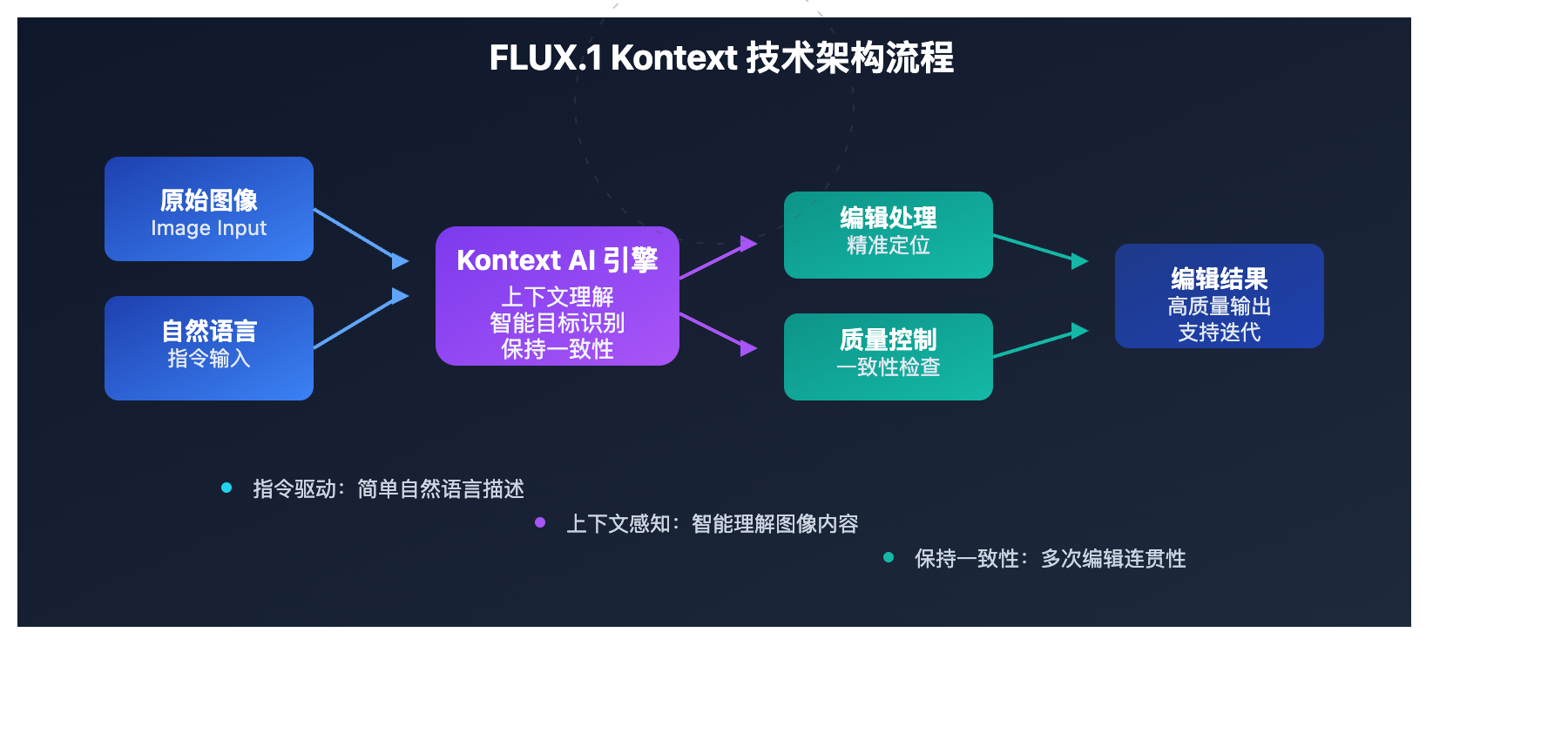

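ComfyUI Workflow API in Practice

🎨 Workflow Template Design

A complex ComfyUI workflow can be expressed as a JSON node graph with `{{placeholder}}` parameters, so a caller only has to supply values such as the prompt and image size in a simple API call.

Standard workflow template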

    {
      "workflow_template": {
        "name": "SDXL_Advanced_Pipeline",
        "description": "Advanced image generation pipeline for the SDXL model",
        "nodes": {
          "1": {
            "class_type": "CheckpointLoaderSimple",
            "inputs": {
              "ckpt_name": "sd_xl_base_1.0.safetensors"
            }
          },
          "2": {
            "class_type": "CLIPTextEncode",
            "inputs": {
              "text": "{{positive_prompt}}",
              "clip": ["1", 1]
            }
          },
          "3": {
            "class_type": "CLIPTextEncode",
            "inputs": {
              "text": "{{negative_prompt}}",
              "clip": ["1", 1]
            }
          },
          "4": {
            "class_type": "EmptyLatentImage",
            "inputs": {
              "width": "{{width}}",
              "height": "{{height}}",
              "batch_size": 1
            }
          },
          "5": {
            "class_type": "KSampler",
            "inputs": {
              "seed": "{{seed}}",
              "steps": "{{steps}}",
              "cfg": "{{guidance_scale}}",
              "sampler_name": "euler",
              "scheduler": "normal",
              "denoise": 1.0,
              "model": ["1", 0],
              "positive": ["2", 0],
              "negative": ["3", 0],
              "latent_image": ["4", 0]
            }
          },
          "6": {
            "class_type": "VAEDecode",
            "inputs": {
              "samples": ["5", 0],
              "vae": ["1", 2]
            }
          },
          "7": {
            "class_type": "SaveImage",
            "inputs": {
              "filename_prefix": "ComfyUI_API_",
              "images": ["6", 0]
            }
          }
        }
      }
    }
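The template above stores every parameter as a quoted `{{name}}` placeholder, including numeric ones such as `{{width}}` and `{{steps}}`. The article does not show how the placeholders are filled in, so the following is only a minimal sketch of one way to do it. The function name `render_workflow` is hypothetical. A string that consists of exactly one placeholder is replaced by the value itself, so numbers stay numbers; a placeholder embedded in longer text is substituted as a string.

```python
import re
from typing import Any, Dict

# A string that is exactly one placeholder, e.g. "{{width}}"
_WHOLE_PLACEHOLDER = re.compile(r"^\{\{(\w+)\}\}$")
# A placeholder embedded anywhere in a longer string
_ANY_PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def render_workflow(template: Any, values: Dict[str, Any]) -> Any:
    """Return a new structure with every {{name}} placeholder filled in.

    Hypothetical helper: raises KeyError if a placeholder has no value.
    """
    if isinstance(template, dict):
        return {key: render_workflow(item, values) for key, item in template.items()}
    if isinstance(template, list):
        return [render_workflow(item, values) for item in template]
    if isinstance(template, str):
        whole = _WHOLE_PLACEHOLDER.match(template)
        if whole:
            # Keep the value's own type so width, steps and seed stay numeric
            return values[whole.group(1)]
        return _ANY_PLACEHOLDER.sub(lambda m: str(values[m.group(1)]), template)
    return template


# Two nodes taken from the template above
nodes = {
    "2": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "{{positive_prompt}}", "clip": ["1", 1]}},
    "4": {"class_type": "EmptyLatentImage",
          "inputs": {"width": "{{width}}", "height": "{{height}}", "batch_size": 1}},
}
rendered = render_workflow(nodes, {"positive_prompt": "a red fox", "width": 1024, "height": 768})
print(rendered["4"]["inputs"])
```

Because the function builds new dictionaries and lists, the original template is left untouched and can be reused for the next request.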

⚡ Advanced Batch Processing Service

    # 🏗️ Asynchronous batch image generation service
    import asyncio
    import random
    import time
    import uuid
    from dataclasses import dataclass
    from typing import Dict, List, Optional

    import aiohttp


    @dataclass
    class ImageRequest:
        prompt: str
        negative_prompt: str = ""
        width: int = 1024
        height: int = 1024
        steps: int = 20
        guidance_scale: float = 7.5
        seed: Optional[int] = None
        workflow_id: Optional[str] = None
        model: Optional[str] = None  # sent to the API only when set


    class ComfyUIAPIService:
        def __init__(self, api_base: str, api_key: str, max_concurrent: int = 10):
            self.api_base = api_base
            self.api_key = api_key
            self.max_concurrent = max_concurrent
            self.session = None

        async def __aenter__(self):
            self.session = aiohttp.ClientSession(
                headers={"Authorization": f"Bearer {self.api_key}"},
                timeout=aiohttp.ClientTimeout(total=300)
            )
            return self

        async def __aexit__(self, exc_type, exc_val, exc_tb):
            if self.session:
                await self.session.close()

        async def generate_batch(self, image_requests: List[ImageRequest]) -> List[Dict]:
            """Generate images for all requests concurrently."""
            semaphore = asyncio.Semaphore(self.max_concurrent)
            tasks = [
                self._generate_single_with_semaphore(semaphore, req)
                for req in image_requests
            ]
            results = await asyncio.gather(*tasks, return_exceptions=True)
            # Convert any exception that escaped into a failed result
            processed_results = []
            for i, result in enumerate(results):
                if isinstance(result, Exception):
                    processed_results.append({
                        "request_id": str(uuid.uuid4()),
                        "success": False,
                        "error": str(result),
                        "prompt": image_requests[i].prompt
                    })
                else:
                    processed_results.append(result)
            return processed_results

        async def _generate_single_with_semaphore(self, semaphore: asyncio.Semaphore,
                                                  request: ImageRequest) -> Dict:
            """Generate one image while holding a concurrency slot."""
            async with semaphore:
                return await self._generate_single(request)

        async def _generate_single(self, request: ImageRequest) -> Dict:
            """Generate a single image asynchronously."""
            request_id = str(uuid.uuid4())
            start_time = time.time()
            # `request.seed or ...` would discard a valid seed of 0, and a time-based
            # default gives the same seed to every request sent within one second.
            seed = request.seed if request.seed is not None else random.randrange(2**32)
            # Build the request payload
            payload = {
                "prompt": request.prompt,
                "negative_prompt": request.negative_prompt,
                "width": request.width,
                "height": request.height,
                "steps": request.steps,
                "guidance_scale": request.guidance_scale,
                "seed": seed,
                "request_id": request_id
            }
            if request.model:
                payload["model"] = request.model
            # Pick the endpoint
            if request.workflow_id:
                endpoint = f"{self.api_base}/comfyui/workflow/{request.workflow_id}/generate"
            else:
                endpoint = f"{self.api_base}/images/generations"
            try:
                async with self.session.post(endpoint, json=payload) as response:
                    result = await response.json()
                    ok = response.status == 200
                    # Normalize the return format
                    return {
                        "request_id": request_id,
                        "success": ok,
                        "error": None if ok else f"HTTP {response.status}",
                        "prompt": request.prompt,
                        "image_url": self._extract_url(result) if ok else None,
                        "generation_time": time.time() - start_time,
                        "details": result
                    }
            except Exception as e:
                return {
                    "request_id": request_id,
                    "success": False,
                    "error": str(e),
                    "prompt": request.prompt,
                    "generation_time": time.time() - start_time
                }

        @staticmethod
        def _extract_url(result: Dict) -> Optional[str]:
            """Read the image URL whether `data` is an object or a list of objects.

            The response shape is an assumption; check it against your provider.
            """
            data = result.get("data")
            if isinstance(data, list):
                data = data[0] if data else {}
            return data.get("url") if isinstance(data, dict) else None

    # 🎯 Usage example: generate a batch of images in different styles
    async def demo_batch_generation():
        """Demonstrate batch generation."""
        # Prepare the batch of requests
        requests = [
            ImageRequest(
                prompt="a majestic dragon flying over a medieval castle",
                negative_prompt="blurry, low quality",
                width=1024,
                height=1024,
                steps=25
            ),
            ImageRequest(
                prompt="a serene japanese garden with cherry blossoms",
                negative_prompt="ugly, distorted",
                width=1024,
                height=768,
                steps=20
            ),
            ImageRequest(
                prompt="a futuristic cyberpunk cityscape at night",
                negative_prompt="boring, simple",
                width=1024,
                height=1024,
                steps=30
            )
        ]
        # Run the batch
        async with ComfyUIAPIService(
            api_base="https://vip.apiyi.com/v1",
            api_key="your_api_key",
            max_concurrent=5
        ) as service:
            print("Starting batch generation...")
            start_time = time.time()
            results = await service.generate_batch(requests)
            total_time = time.time() - start_time
            success_count = sum(1 for r in results if r["success"])
            print("Batch generation finished!")
            print(f"Total time: {total_time:.2f}s")
            print(f"Success rate: {success_count}/{len(results)} ({success_count/len(results)*100:.1f}%)")
            # Print per-request details
            for result in results:
                if result["success"]:
                    print(f"✅ {result['prompt'][:50]}... - {result['generation_time']:.2f}s")
                else:
                    print(f"❌ {result['prompt'][:50]}... - {result.get('error', 'Unknown error')}")


    # Run the example
    if __name__ == "__main__":
        asyncio.run(demo_batch_generation())

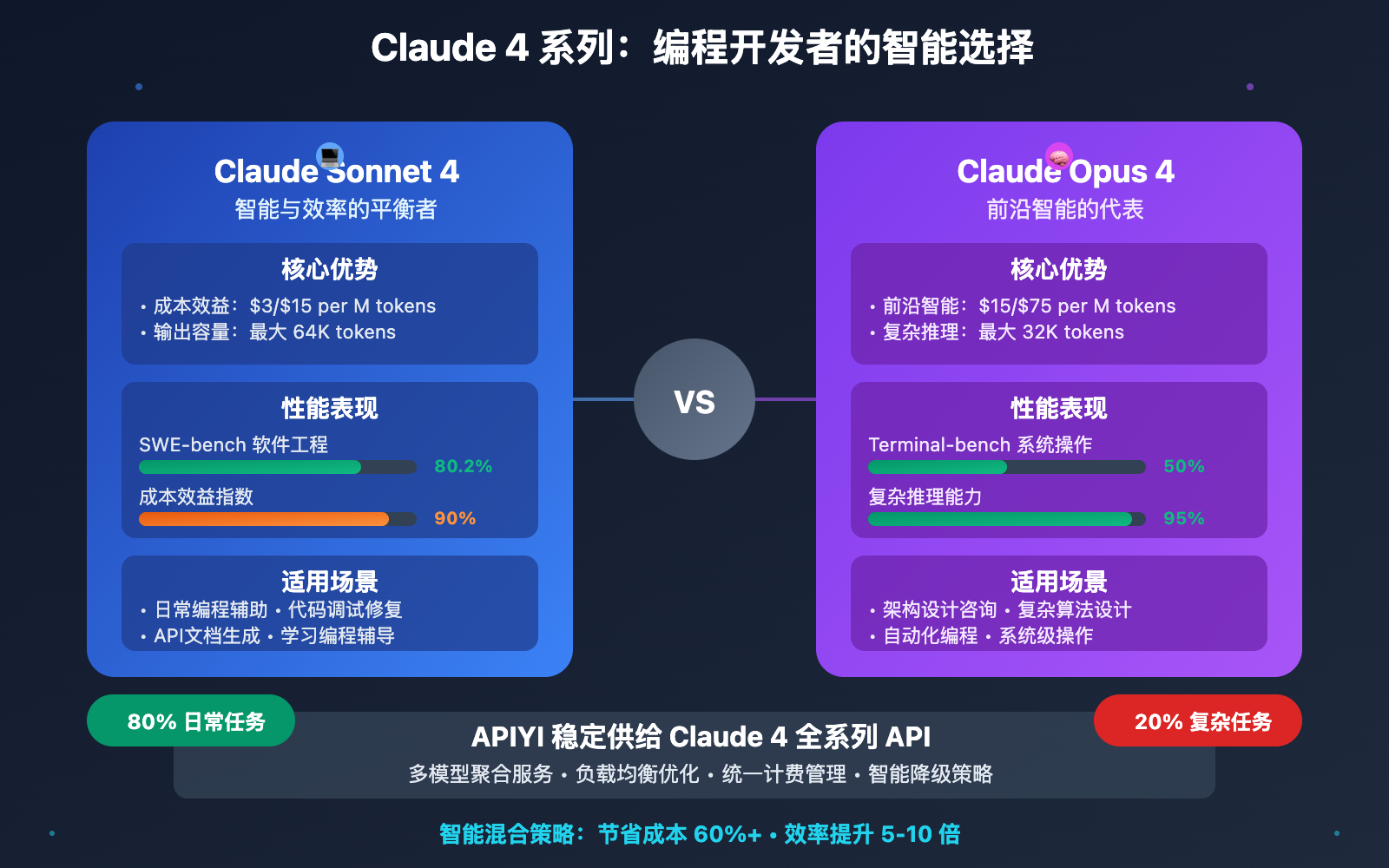

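Advanced Stable Diffusion API Optimization

🎯 Model Selection and Parameter Tuning

Getting good results at a reasonable cost depends on choosing the right combination of model and parameters.

Model comparison

| Model | Use case | Generation speed | Image quality | Cost-effectiveness |
|---|---|---|---|---|
| SDXL Base | General-purpose, high-quality images | Medium | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐ |
| SD 1.5 | Rapid prototyping | Fast | ⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ |
| SD 2.1 | Balanced choice | Medium | ⭐⭐⭐⭐ | ⭐⭐⭐⭐ |
| Custom LoRA | Specific styles | Slow | ⭐⭐⭐⭐⭐ | ⭐⭐⭐ |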

🚀 Adaptive Parameter Selection

    # 🧠 Heuristic parameter optimizer
    from typing import Dict, List


    class SmartParameterOptimizer:
        # Assumed keyword list: the original does not show how style keywords are found
        STYLE_KEYWORDS = ("anime", "photorealistic", "watercolor", "oil painting", "cyberpunk", "sketch")

        def __init__(self, api_service):
            self.api_service = api_service
            self.optimization_history = []

        def optimize_for_prompt(self, prompt: str, target_quality: str = "balanced"):
            """Choose generation parameters based on the prompt."""
            # Analyze the prompt
            prompt_features = self._analyze_prompt(prompt)
            # Pick base parameters for the target quality
            base_params = self._get_base_params(target_quality)
            # Adjust them according to the prompt features
            optimized_params = self._adjust_params_by_features(base_params, prompt_features)
            return optimized_params

        def _analyze_prompt(self, prompt: str) -> Dict:
            """Extract simple features from the prompt.

            Matching is by substring, so "man" also matches "woman" or "romantic".
            """
            lowered = prompt.lower()
            features = {
                "complexity": len(prompt.split()) / 10,  # word count / 10
                "has_person": any(word in lowered for word in ["person", "man", "woman", "people"]),
                "has_landscape": any(word in lowered for word in ["landscape", "mountain", "forest", "ocean"]),
                "has_architecture": any(word in lowered for word in ["building", "house", "castle", "city"]),
                "style_keywords": self._extract_style_keywords(prompt)
            }
            return features

        def _extract_style_keywords(self, prompt: str) -> List[str]:
            """Return the known style keywords found in the prompt (assumed helper)."""
            lowered = prompt.lower()
            return [keyword for keyword in self.STYLE_KEYWORDS if keyword in lowered]

        def _get_base_params(self, target_quality: str) -> Dict:
            """Return the base parameter preset for a quality level."""
            configs = {
                "fast": {
                    "model": "stable-diffusion-v1-5",
                    "steps": 15,
                    "guidance_scale": 7.0,
                    "width": 512,
                    "height": 512
                },
                "balanced": {
                    "model": "stable-diffusion-xl-base-1.0",
                    "steps": 20,
                    "guidance_scale": 7.5,
                    "width": 1024,
                    "height": 1024
                },
                "quality": {
                    "model": "stable-diffusion-xl-base-1.0",
                    "steps": 30,
                    "guidance_scale": 8.0,
                    "width": 1024,
                    "height": 1024
                }
            }
            # Unknown presets fall back to "balanced"
            return configs.get(target_quality, configs["balanced"])

        def _adjust_params_by_features(self, base_params: Dict, features: Dict) -> Dict:
            """Adjust the preset according to the prompt features."""
            adjusted = base_params.copy()
            # Images of people get more steps and slightly stronger guidance
            if features["has_person"]:
                adjusted["steps"] = max(adjusted["steps"], 25)
                adjusted["guidance_scale"] = min(adjusted["guidance_scale"] + 0.5, 10.0)
            # Landscapes without people can use fewer steps (never below 15)
            if features["has_landscape"] and not features["has_person"]:
                adjusted["steps"] = max(adjusted["steps"] - 5, 15)
            # Prompts longer than 20 words get more steps (never above 40)
            if features["complexity"] > 2.0:
                adjusted["steps"] = min(adjusted["steps"] + 5, 40)
            return adjusted

    # 🎯 Batch generation service with parameter optimization
    from dataclasses import fields


    class OptimizedBatchService:
        def __init__(self, api_base: str, api_key: str):
            self.api_service = ComfyUIAPIService(api_base, api_key)
            self.optimizer = SmartParameterOptimizer(self.api_service)

        async def smart_batch_generation(self, prompts: List[str],
                                         target_quality: str = "balanced") -> List[Dict]:
            """Generate a batch with per-prompt parameters."""
            # Pass only the keys ImageRequest declares: an undeclared key in the
            # preset would raise TypeError in the dataclass constructor.
            allowed = {f.name for f in fields(ImageRequest)}
            optimized_requests = []
            for prompt in prompts:
                params = self.optimizer.optimize_for_prompt(prompt, target_quality)
                accepted = {k: v for k, v in params.items() if k in allowed}
                optimized_requests.append(ImageRequest(prompt=prompt, **accepted))
            # Run the batch
            async with self.api_service:
                results = await self.api_service.generate_batch(optimized_requests)
            return results


    # Usage example
    async def demo_smart_generation():
        service = OptimizedBatchService(
            api_base="https://vip.apiyi.com/v1",
            api_key="your_api_key"
        )
        prompts = [
            "portrait of a young woman with flowing hair",
            "vast mountain landscape at sunset",
            "detailed architectural drawing of gothic cathedral"
        ]
        results = await service.smart_batch_generation(prompts, "quality")
        for result in results:
            print(f"Generated: {result['prompt'][:30]}... - Success: {result['success']}")

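Production Deployment and Monitoring

🏗️ Service Architecture

In production, the service also has to address high availability, monitoring and alerting, and cost control.

A production-oriented implementation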

    # 🏭 Production-oriented batch image generation service
    import hashlib
    import json
    import logging
    import os  # needed for os.getenv below
    from datetime import datetime, timedelta
    from typing import List, Optional

    import aiohttp  # needed by send_callback_notification
    import redis
    from fastapi import FastAPI, HTTPException, BackgroundTasks
    from pydantic import BaseModel

    # Configure logging
    logging.basicConfig(level=logging.INFO)
    logger = logging.getLogger(__name__)

    app = FastAPI(title="ComfyUI Batch Generation Service", version="1.0.0")

    # Redis connection (used for the task queue and the cache)
    redis_client = redis.Redis(host='localhost', port=6379, db=0)

    class BatchRequest(BaseModel):
        prompts: List[str]
        negative_prompt: Optional[str] = ""
        quality_preset: Optional[str] = "balanced"
        callback_url: Optional[str] = None
        priority: Optional[int] = 1


    class BatchResponse(BaseModel):
        task_id: str
        status: str
        estimated_completion: Optional[datetime] = None
        total_images: int


    class ProductionBatchService:
        def __init__(self):
            self.api_base = "https://vip.apiyi.com/v1"
            self.api_key = os.getenv("APIYI_API_KEY")
            self.max_concurrent = 10
            self.rate_limit_per_minute = 100

        async def submit_batch_job(self, request: BatchRequest) -> BatchResponse:
            """Submit a batch task."""
            # Generate the task ID
            task_id = self._generate_task_id(request)
            # Return the cached result if this exact batch was already processed
            cached_result = self._check_cache(task_id)
            if cached_result:
                return cached_result
            # Estimate the completion time
            estimated_time = self._estimate_completion_time(len(request.prompts))
            # Build the task record
            task_info = {
                "task_id": task_id,
                "status": "queued",
                "prompts": request.prompts,
                "quality_preset": request.quality_preset,
                "callback_url": request.callback_url,
                "created_at": datetime.now().isoformat(),
                "estimated_completion": estimated_time.isoformat(),
                "total_images": len(request.prompts)
            }
            # Store it in Redis for 24 hours. Pass the timedelta itself:
            # total_seconds() returns a float, which Redis rejects as an expiry.
            redis_client.setex(
                f"task:{task_id}",
                timedelta(hours=24),
                json.dumps(task_info)
            )
            # Add the task to the queue for its priority
            redis_client.lpush(f"queue:priority_{request.priority}", task_id)
            return BatchResponse(
                task_id=task_id,
                status="queued",
                estimated_completion=estimated_time,
                total_images=len(request.prompts)
            )

        def _generate_task_id(self, request: BatchRequest) -> str:
            """Derive the task ID from the request content.

            Identical requests share an ID, which is what makes the cache lookup work.
            """
            content = f"{request.prompts}{request.quality_preset}{request.negative_prompt}"
            return hashlib.md5(content.encode()).hexdigest()

        def _check_cache(self, task_id: str) -> Optional[BatchResponse]:
            """Look up a cached result."""
            cached = redis_client.get(f"result:{task_id}")
            if cached:
                data = json.loads(cached)
                return BatchResponse(**data)
            return None

        def _estimate_completion_time(self, image_count: int) -> datetime:
            """Estimate when the batch will finish."""
            # Assume 8 seconds per image on average, spread over the concurrent slots
            avg_time_per_image = 8
            concurrent_factor = self.max_concurrent
            estimated_seconds = (image_count / concurrent_factor) * avg_time_per_image
            # Add the wait for tasks already queued at priority 1
            queue_length = redis_client.llen("queue:priority_1")
            queue_wait_time = (queue_length * avg_time_per_image) / concurrent_factor
            total_seconds = estimated_seconds + queue_wait_time
            return datetime.now() + timedelta(seconds=total_seconds)


    # Create the service instance
    batch_service = ProductionBatchService()

    @app.post("/batch/submit", response_model=BatchResponse)
    async def submit_batch(request: BatchRequest, background_tasks: BackgroundTasks):
        """Submit a batch generation task."""
        try:
            # Validate the request
            if len(request.prompts) > 100:
                raise HTTPException(status_code=400, detail="At most 100 prompts are supported")
            # Submit the task
            response = await batch_service.submit_batch_job(request)
            # Start background processing
            background_tasks.add_task(process_batch_task, response.task_id)
            return response
        except HTTPException:
            # Re-raise as is; otherwise the 400 above would be reported as a 500
            raise
        except Exception as e:
            logger.error(f"Failed to submit batch task: {e}")
            raise HTTPException(status_code=500, detail="Internal server error")


    @app.get("/batch/{task_id}/status")
    async def get_task_status(task_id: str):
        """Query the status of a task."""
        task_info = redis_client.get(f"task:{task_id}")
        if not task_info:
            raise HTTPException(status_code=404, detail="Task not found")
        return json.loads(task_info)


    @app.get("/batch/{task_id}/results")
    async def get_task_results(task_id: str):
        """Fetch the results of a task."""
        results = redis_client.get(f"result:{task_id}")
        if not results:
            raise HTTPException(status_code=404, detail="No results: the task does not exist or has not finished")
        return json.loads(results)

    async def process_batch_task(task_id: str):
        """Process a batch task in the background."""
        # Fallback record so the except block can always store a failure
        task_info = {"task_id": task_id}
        try:
            # Load the task record
            raw = redis_client.get(f"task:{task_id}")
            if raw is None:
                raise RuntimeError("task record not found or expired")
            task_info = json.loads(raw)
            # Mark the task as processing
            task_info["status"] = "processing"
            task_info["started_at"] = datetime.now().isoformat()
            redis_client.setex(f"task:{task_id}", 86400, json.dumps(task_info))
            # Run the batch
            service = OptimizedBatchService(
                api_base="https://vip.apiyi.com/v1",
                api_key=os.getenv("APIYI_API_KEY")
            )
            results = await service.smart_batch_generation(
                task_info["prompts"],
                task_info["quality_preset"]
            )
            # Record the outcome
            task_info["status"] = "completed"
            task_info["completed_at"] = datetime.now().isoformat()
            task_info["results"] = results
            # Save the results, and update the task record so /status reports "completed"
            serialized = json.dumps(task_info)
            redis_client.setex(f"result:{task_id}", 86400, serialized)
            redis_client.setex(f"task:{task_id}", 86400, serialized)
            # Send the callback notification
            if task_info.get("callback_url"):
                await send_callback_notification(task_info["callback_url"], task_info)
            logger.info(f"Task {task_id} finished with {len(results)} results")
        except Exception as e:
            logger.error(f"Task {task_id} failed: {e}")
            # Record the failure
            task_info["status"] = "failed"
            task_info["error"] = str(e)
            redis_client.setex(f"task:{task_id}", 86400, json.dumps(task_info))


    async def send_callback_notification(callback_url: str, task_info: dict):
        """Send the callback notification."""
        try:
            async with aiohttp.ClientSession() as session:
                await session.post(callback_url, json=task_info)
        except Exception as e:
            logger.error(f"Callback notification failed: {e}")


    if __name__ == "__main__":
        import uvicorn
        uvicorn.run(app, host="0.0.0.0", port=8000)
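To make the arithmetic in `_estimate_completion_time` concrete, the sketch below restates the same formula as a pure function without Redis. The function name `estimate_seconds` and the sample inputs are illustrative assumptions; the 8-second average and the concurrency of 10 are the defaults used in the service code. Note that the queue stores task IDs, so each queued batch is weighted like a single image and the real wait can be longer than the estimate.

```python
def estimate_seconds(image_count: int, queue_length: int,
                     max_concurrent: int = 10,
                     avg_seconds_per_image: float = 8.0) -> float:
    """Same formula as _estimate_completion_time, returning seconds from now."""
    # Time for this batch: images are spread over the concurrent slots
    own_work = (image_count / max_concurrent) * avg_seconds_per_image
    # Wait for what is already queued (each queue entry counted as one image)
    queue_wait = (queue_length * avg_seconds_per_image) / max_concurrent
    return own_work + queue_wait


# 50 images with 20 entries already queued:
# (50 / 10) * 8 = 40 s of work, plus (20 * 8) / 10 = 16 s of waiting
print(estimate_seconds(image_count=50, queue_length=20))  # 56.0
```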

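✅ ComfyUI + Stable Diffusion API Best Practices

| Practice | Recommendation | Watch out for |
|---|---|---|
| 🎯 Architecture | Separate workflow design from execution | Avoid single points of failure |
| ⚡ Performance | Set concurrency and generation parameters deliberately | Balance speed against quality |
| 💡 Cost control | Cache results and optimize parameters | Monitor API call volume |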
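`ProductionBatchService` declares `rate_limit_per_minute = 100`, but none of the code shown enforces it. One possible way to keep call volume under such a limit is a sliding-window limiter. The sketch below is an assumption about how it could be done, not the article's implementation; the class name `SlidingWindowLimiter` and the injectable `clock` parameter are hypothetical.

```python
import time
from collections import deque
from typing import Callable, Deque


class SlidingWindowLimiter:
    """Allow at most `max_calls` calls within any `window_seconds` interval."""

    def __init__(self, max_calls: int, window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._calls: Deque[float] = deque()

    def try_acquire(self) -> bool:
        """Record a call and return True, or return False if the window is full."""
        now = self.clock()
        # Drop timestamps that have left the window
        while self._calls and now - self._calls[0] >= self.window_seconds:
            self._calls.popleft()
        if len(self._calls) < self.max_calls:
            self._calls.append(now)
            return True
        return False


limiter = SlidingWindowLimiter(max_calls=100, window_seconds=60.0)
if limiter.try_acquire():
    print("request allowed")
```

A caller that gets `False` can either queue the request or sleep briefly and try again. The limiter keeps its state in memory, so it only covers a single process.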

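📋 Deployment Checklist

| Item | Production requirement | How to verify |
|---|---|---|
| API key | Set through an environment variable | Make a test API call |
| Concurrency limit | Stays within the provider's limit | Load test |
| Error handling | Exceptions caught on every request path | Test failure scenarios |
| Monitoring and alerting | Key metrics are monitored | Trigger a test alert |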
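The first checklist item can be verified before the service starts. The following sketch checks that the two environment variables used in this article, `APIYI_API_KEY` and `REDIS_URL`, are set and non-empty. The function name `missing_settings` is hypothetical, and the check only covers presence; whether the key is valid still needs a test API call.

```python
import os
from typing import List, Mapping, Sequence

# Variable names taken from this article's deployment instructions
REQUIRED_VARS = ("APIYI_API_KEY", "REDIS_URL")


def missing_settings(env: Mapping[str, str],
                     required: Sequence[str] = REQUIRED_VARS) -> List[str]:
    """Return the required variables that are unset or blank."""
    return [name for name in required if not env.get(name, "").strip()]


missing = missing_settings(os.environ)
if missing:
    print("Missing configuration:", ", ".join(missing))
else:
    print("All required environment variables are set")
```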

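❓ ComfyUI + Stable Diffusion API FAQ

Q1: How do I choose the right concurrency level and API provider?

Consider the following factors when choosing a concurrency level.

Provider limits:

- Check the rate limits in the official documentation
- Test how much concurrency the API actually sustains
- Monitor error rates and response times

Cost-benefit analysis: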

    # Concurrency tuning test
    # Note: this sends 5 x 50 = 250 real requests, which are billed by the provider.
    async def test_optimal_concurrency():
        concurrency_levels = [1, 5, 10, 15, 20]
        for level in concurrency_levels:
            service = ComfyUIAPIService(
                api_base="https://vip.apiyi.com/v1",
                api_key="your_key",
                max_concurrent=level
            )
            # Send the same fixed number of requests at each level
            test_requests = [ImageRequest(f"test prompt {i}") for i in range(50)]
            start_time = time.time()
            async with service:
                results = await service.generate_batch(test_requests)
            total_time = time.time() - start_time
            success_rate = sum(1 for r in results if r["success"]) / len(results)
            print(f"Concurrency {level}: {total_time:.2f}s elapsed, success rate {success_rate:.2%}")
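Once `test_optimal_concurrency` has printed its measurements, a level still has to be chosen. One reasonable rule, offered here as an assumption rather than the article's method, is to discard levels whose success rate falls below a threshold and then take the level with the highest number of successful images per second. The names `Measurement` and `pick_concurrency` are hypothetical, and the numbers in the example are made up purely to illustrate the calculation.

```python
from typing import List, NamedTuple, Optional


class Measurement(NamedTuple):
    concurrency: int
    total_seconds: float
    success_rate: float  # between 0.0 and 1.0


def pick_concurrency(measurements: List[Measurement], request_count: int,
                     min_success_rate: float = 0.99) -> Optional[int]:
    """Return the level with the best successful throughput, or None if none qualifies."""
    eligible = [m for m in measurements if m.success_rate >= min_success_rate]
    if not eligible:
        return None
    # Successful images per second at each eligible level
    best = max(eligible, key=lambda m: request_count * m.success_rate / m.total_seconds)
    return best.concurrency


# Illustrative numbers only, not real benchmark results
sample = [
    Measurement(1, 400.0, 1.0),
    Measurement(5, 90.0, 1.0),
    Measurement(10, 50.0, 1.0),
    Measurement(20, 40.0, 0.8),  # fastest, but too many failures
]
print(pick_concurrency(sample, request_count=50))  # 10
```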

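A professional aggregation platform such as API易 is recommended; such platforms usually offer better concurrency support and load balancing.

Q2: How do I handle retries and error recovery during batch generation?

Build error handling and a retry mechanism into the service: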

    class RobustBatchService:
        def __init__(self, api_service: ComfyUIAPIService, max_retries=3, retry_delay=1.0):
            # `api_service` must already be open (used inside `async with`);
            # it provides the _generate_single call that this class retries.
            self.api_service = api_service
            self.max_retries = max_retries
            self.retry_delay = retry_delay

        async def generate_with_retry(self, request: ImageRequest) -> Dict:
            """Generate an image, retrying on failure."""
            last_error = None
            for attempt in range(self.max_retries + 1):
                try:
                    result = await self.api_service._generate_single(request)
                    if result["success"]:
                        return result
                    last_error = result.get("error", "Unknown error")
                except Exception as e:
                    last_error = str(e)
                if attempt < self.max_retries:
                    # Exponential backoff: 1x, 2x, 4x, ... the base delay
                    await asyncio.sleep(self.retry_delay * (2 ** attempt))
            return {
                "success": False,
                "error": f"Still failing after {self.max_retries} retries: {last_error}",
                "prompt": request.prompt
            }

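Key strategies:

- Exponential backoff: wait longer after each failed attempt so retries do not add load to a struggling server
- Error classification: distinguish retryable errors from non-retryable ones
- Resumable batches: support continuing from the point of failure
- Monitoring and alerting: detect system problems promptly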
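The error-classification strategy can be sketched with HTTP status codes. Which codes are worth retrying depends on the provider, so the set below is an assumption to adapt, and the names `is_retryable` and `backoff_delays` are hypothetical. The idea is that transient conditions (timeouts, rate limiting, server-side errors) are retried, while client errors such as a bad request or an invalid key fail immediately, because repeating them cannot succeed.

```python
from typing import List, Optional

# Assumed set of transient statuses; confirm against your provider's documentation
RETRYABLE_STATUS = {408, 425, 429, 500, 502, 503, 504}


def is_retryable(status: Optional[int]) -> bool:
    """Decide whether a failed request should be retried.

    `status` is None when no response arrived at all (network error or timeout).
    """
    if status is None:
        return True
    return status in RETRYABLE_STATUS


def backoff_delays(max_retries: int, base_delay: float = 1.0) -> List[float]:
    """Delays before each retry, doubling every time: base, 2*base, 4*base, ..."""
    return [base_delay * (2 ** attempt) for attempt in range(max_retries)]


print(is_retryable(429), is_retryable(400))  # True False
print(backoff_delays(3))                     # [1.0, 2.0, 4.0]
```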

Q3: How do I optimize cost and efficiency for large-scale batch generation?

Key strategies for cost optimization:

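    import hashlib


    class CostOptimizedService:
        def __init__(self):
            self.cache = {}
            self.price_per_image = 0.02  # assumed cost per image

        def calculate_batch_cost(self, requests: List[ImageRequest]) -> Dict:
            """Estimate the cost of a batch."""
            total_cost = 0
            cache_hits = 0
            for req in requests:
                request_hash = self._get_request_hash(req)
                if request_hash in self.cache:
                    cache_hits += 1
                else:
                    # Scale the base price by the request parameters
                    cost_multiplier = self._get_cost_multiplier(req)
                    total_cost += self.price_per_image * cost_multiplier
            return {
                "total_requests": len(requests),
                "cache_hits": cache_hits,
                "new_generations": len(requests) - cache_hits,
                "estimated_cost": total_cost,
                "savings_from_cache": cache_hits * self.price_per_image
            }

        def _get_request_hash(self, request: ImageRequest) -> str:
            """Cache key for a request (assumed helper, not shown in the original)."""
            key = (f"{request.prompt}|{request.negative_prompt}|"
                   f"{request.width}x{request.height}|{request.steps}|{request.guidance_scale}")
            return hashlib.md5(key.encode()).hexdigest()

        def _get_cost_multiplier(self, request: ImageRequest) -> float:
            """Compute the cost multiplier from the request parameters."""
            multiplier = 1.0
            # Resolutions above 1024x1024 cost more
            if request.width * request.height > 1024 * 1024:
                multiplier *= 1.5
            # More than 25 steps costs more
            if request.steps > 25:
                multiplier *= 1.2
            return multiplier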

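Optimization suggestions:

- Smart caching: reuse results for similar requests
- Parameter presets: offer cost-friendly parameter combinations
- Volume discounts: choose a provider that offers batch pricing
- Usage analysis: review usage patterns regularly and adjust the configuration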
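For the smart-caching suggestion, "similar" has to be made precise before results can be reused. A simple interpretation, assumed here, is to treat prompts as equal when they differ only in letter case or whitespace, and to include every parameter that changes the image in the key. The function name `cache_key` is hypothetical. The seed is left out on purpose, which means a cache hit returns the earlier image instead of a new variation.

```python
import hashlib
import json


def cache_key(prompt: str, width: int = 1024, height: int = 1024,
              steps: int = 20, guidance_scale: float = 7.5) -> str:
    """Build a stable cache key from a normalized prompt and its parameters."""
    # Lowercase and collapse runs of whitespace so trivial differences still match
    normalized = " ".join(prompt.lower().split())
    payload = json.dumps(
        {"prompt": normalized, "width": width, "height": height,
         "steps": steps, "guidance_scale": guidance_scale},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


# Differences in case and spacing map to the same key
print(cache_key("A  Cute Cat ") == cache_key("a cute cat"))            # True
# A different parameter produces a different key
print(cache_key("a cute cat") == cache_key("a cute cat", steps=30))    # False
```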

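📚 Further Reading

🛠️ Complete Project Code

The complete ComfyUI + Stable Diffusion API batch generation service is open source and includes the production features described in this article:

Repository: comfyui-batch-api-service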

    # Quick deployment
    git clone https://github.com/apiyi-api/comfyui-batch-api-service
    cd comfyui-batch-api-service

    # Install dependencies
    pip install -r requirements.txt

    # Configure environment variables
    export APIYI_API_KEY=your_api_key
    export REDIS_URL=redis://localhost:6379

    # Start the service
    python main.py

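Project features:

- Batch generation with a 10-line core function
- Asynchronous, highly concurrent processing
- Prompt-based parameter optimization
- Task queue and cache
- Production-oriented error handling and monitoring
- Docker deployment support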

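🔗 Related Resources

| Resource | Recommended content | Where to find it |
|---|---|---|
| API documentation | Complete Stable Diffusion API documentation | API易 documentation at help.apiyi.com |
| Workflow library | Collection of ComfyUI workflow templates | Open-source project on GitHub |
| Performance testing | Load-testing tool for batch generation | `tools` directory of the project repository |
| Monitoring | Production monitoring configuration | Monitoring guide in the `docs` directory |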

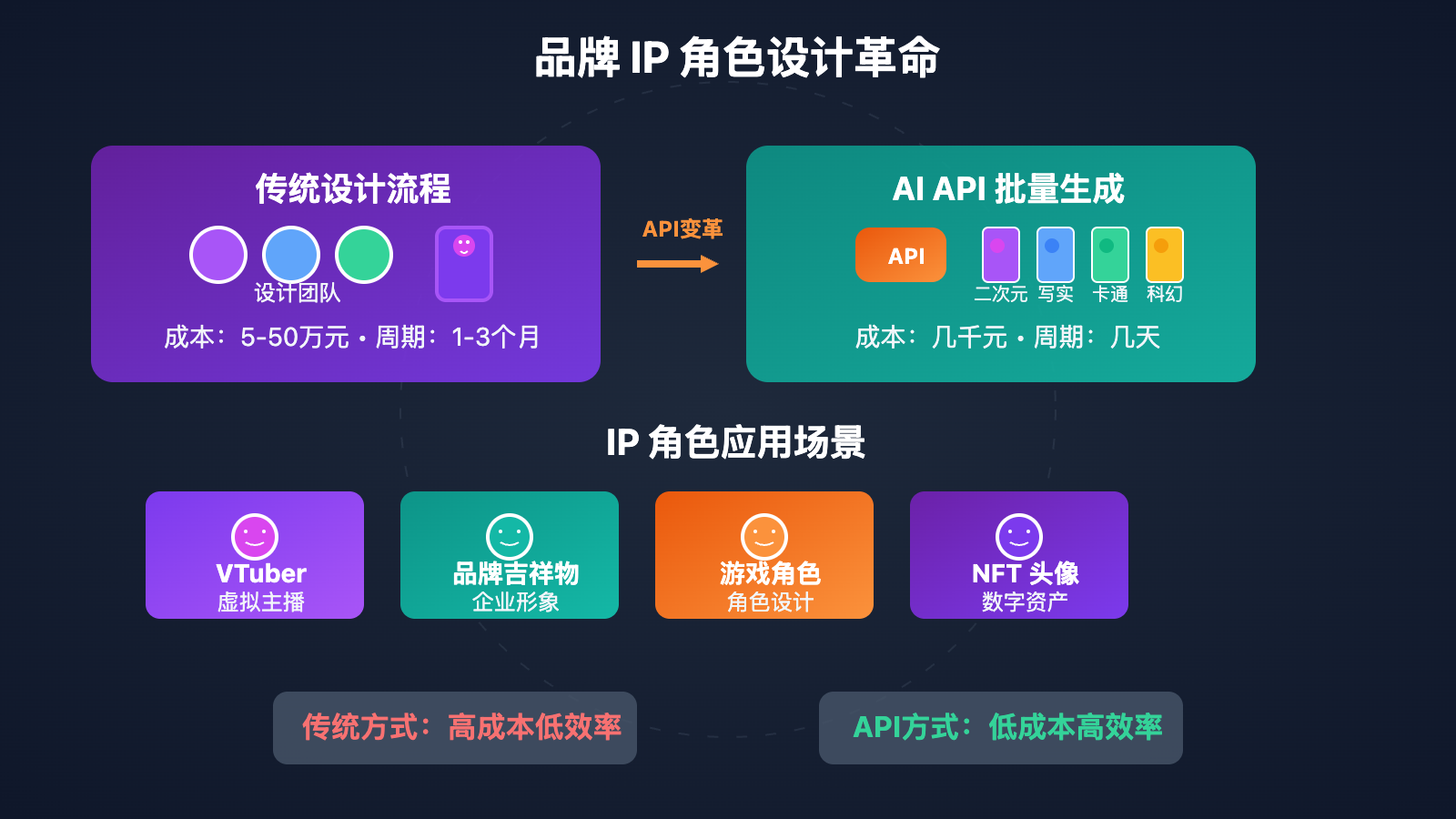

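🎯 Summary

By combining ComfyUI with a Stable Diffusion API, we built a batch image generation service around a roughly 10-line core function. The article moved from simple synchronous calls to asynchronous batch processing, and from basic functionality to a production deployment.

Key takeaways: core architecture design, parameter optimization, production deployment, and cost control are the four elements this article relies on for a dependable AI service.

In practice:

- Start with simple batch processing and extend it step by step
- Take error handling, monitoring, and alerting seriously
- Choose a concurrency strategy that fits your workload
- Keep tuning parameters and cost controls

For enterprise users who prioritize stability and scalability, a professional AI service aggregation platform such as API易 is recommended. Besides a wide choice of models and optimized API endpoints, it provides load balancing, monitoring and alerting, and technical support.

📝 About the author: AI architect focused on deploying and optimizing large-scale AI services, with hands-on involvement in the architecture of several enterprise AI projects. Search for "API易" to find more AI service solutions and enterprise deployment practices.

🔔 Discussion: questions about ComfyUI API integration are welcome in the comments. The author continues to share experience with AI service architecture and batch processing optimization.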